So now that I’ve got your attention… I wanted to put together some thoughts around a design principle I call the acceptable unit of loss, or AUL.

Acceptable Unit of Loss: def. A unit to describe the amount of a specific resource that you’re willing to lose

Sounds pretty simple, doesn’t it? But what does it have to do with data networking?

White Boxes and Cattle

2015 is the year of the white box. For those of you who have been hiding under a router for the last year, a white box is basically a network infrastructure device, right now limited to switches, that ships with no operating system.

The idea is that you:

- Buy some hardware

- Buy an operating system license (or download an open source version)

- Install the operating system on your network device

- Use DevOps tools to manage the whole thing

Add ice. Shake, and IT operational goodness ensues.

Where’s the beef?

So where do the cattle come in? Pets vs. Cattle is something you can research elsewhere for a more thorough treatment, but in a nutshell, it’s the idea that pets are something that you love and care for and let sleep on the bed and give special treats to on Christmas. Cattle, on the other hand, are things you give a number, feed from a trough, and kill off without remorse if a small group suddenly becomes ill. You replace them without a second thought.

Cattle vs. Pets is a way to describe the operational model that’s been applied to server operations at scale. The metaphor looks a little like this:

The servers are cattle. They get managed by tools like Ansible, Puppet, Chef, Salt Stack, Docker, Rocket, etc., which at a high level all allow a new version of the server to be instantiated with a very specific configuration and little to no human intervention. Fully orchestrated.

Your server starts acting up? Kill it. Rebuild it. Put it back in the herd.

Now one thing that a lot of enterprise engineers seem to be missing is that this operational model is predicated on the fact that your application has been built with a well-thought-out scale-out architecture, one that allows the distributed application to continue to operate when the “sick” servers are destroyed and that seamlessly integrates the new servers into the collective without a second thought. Pretty cool, no?

Are your switches Cattle?

So this brings me to the Acceptable Unit of Loss. I’ve had a lot of discussions with enterprise-focused engineers who seem to believe that white box and DevOps tools are going to drive down all their infrastructure costs and solve all their management issues.

“It’s broken? Just nuke it and rebuild it!” “It’s broken? Grab another one, they’re cheap!”

For me, the only way this particular argument from customers holds up is if their AUL metric is big enough.

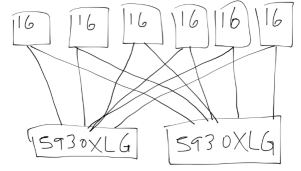

To hopefully make this point I’ll use a picture and a little math:

Consider the following hardware:

- HP C7000 Blade Server Chassis – 16 Blades per Chassis

- HP 6125XLG Ethernet Interconnect – 4 x 40Gb Uplinks

- HP 5930 Top of Rack Switch – 32 x 40Gb ports, but from the data sheet: “40GbE ports may be split into four 10GbE ports each for a total of 96 10GbE ports with 8 40GbE Uplinks per switch.”

So let’s put this together

So we’ll start with

- 2 x HP 5930 ToR switches

For the math, I’m going to assume dual 5930s with dual 6125XLGs in each C7000 chassis. We’ll assume all links are redundant, which makes the math a little bit easier. (We’ll only count this with 1 x 5930, cool?)

- 32 x 40Gb ports on the HP 5930, minus 8 x 40Gb ports reserved for uplinks = 24 x 40Gb ports for connection to those HP 6125XLG interconnects in the C7000 Blade Chassis.

- 24 x 40Gb ports from the HP 5930 will allow us to connect 6 x 6125XLGs for all four 40Gb uplinks.

Still with me?

- 6 x 6125XLGs means 6 x C7000, which then translates into 6 x 16 = 96 physical servers.

If we go with a conservative VM to server ratio of 30:1, that gets us to 2,880 VMs running on our little design.
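If you want to plug in your own hardware, the port math above can be sketched in a few lines of Python. The function name and parameter names here are mine, made up for illustration; the default values are the figures from this design, including the assumed 30:1 VM ratio.

```python
def blast_radius(tor_ports=32, uplink_ports=8, links_per_interconnect=4,
                 blades_per_chassis=16, vms_per_server=30):
    """Count what hangs off a single ToR switch in the design above."""
    # Ports left for server-facing links once the uplinks are reserved.
    downlinks = tor_ports - uplink_ports
    # Each interconnect (one per chassis, per ToR) uses all four 40Gb uplinks.
    chassis = downlinks // links_per_interconnect
    servers = chassis * blades_per_chassis
    vms = servers * vms_per_server
    return {"downlinks": downlinks, "chassis": chassis,
            "servers": servers, "vms": vms}


if __name__ == "__main__":
    for name, count in blast_radius().items():
        print(f"{name}: {count}")
```

Change the defaults to match your own ToR, chassis, and consolidation ratio and you get a rough first number for your own AUL.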

How much can you lose?

So now is where you ask the question:

Can you afford to lose 2,880 VMs?

According to the cattle & pets analogy, cattle can be replaced with no impact to operations because the herd will move on without noticing. I.e., the Acceptable Unit of Loss is small enough that you’re still able to get the required value from the infrastructure assets.

The obvious first objection I’m going to get is

“But wait! There are two REDUNDANT switches right? No problem, right?”

The reality of most networks today is that they are designed to maximize network throughput and make efficient use of all available bandwidth. MLAG, in this case brought to you by HP’s IRF, allows you to bind interfaces from two different physical boxes into a single link aggregation pipe. (Think vPC, VSS, or whatever other MLAG technology you’re familiar with.)

So I ask you, what are the chances that you’re running the unit at below 50% of the available bandwidth?

Yeah… I thought so.

So the reality is that when we lose that single ToR switch, we’re actually going to start dropping packets somewhere, because you’ve been running the system at 70-80% utilization to maximize the value of those infrastructure assets.

So what happens to TCP-based applications when we start to experience packet loss? For a full treatment of the subject, feel free to go check out Terry Slattery’s excellent blog on TCP Performance and the Mathis Equation. For those of you who didn’t follow the math, let me sum it up for you.
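To put a rough number on that, here is a minimal Python sketch. It assumes traffic is spread evenly across the MLAG pair and that all of it lands on the surviving switch after a failure; that is a simplification I’m making for illustration, and the function name is made up, not anything from HP.

```python
def load_after_failure(utilization, switches=2, failed=1):
    """Offered load on each surviving switch, as a fraction of its capacity.

    Assumes (for illustration) that load is balanced evenly before the
    failure and redistributes evenly across the survivors afterwards.
    """
    survivors = switches - failed
    if survivors <= 0:
        raise ValueError("at least one switch must survive")
    return utilization * switches / survivors


if __name__ == "__main__":
    for utilization in (0.5, 0.7, 0.8):
        load = load_after_failure(utilization)
        # Anything above 1.0 is traffic the survivor has no capacity for.
        excess = max(0.0, load - 1.0)
        print(f"{utilization:.0%} before -> {load:.0%} offered, "
              f"{excess:.0%} of one switch's capacity has nowhere to go")
```

Under those assumptions, anything above 50% utilization on the pair means the survivor is asked to carry more than it can.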

Really Bad Things.

On a ten gig link, bad things start to happen at 0.0001% packet loss.
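If you’d rather poke at the numbers yourself, here is a small Python sketch of the Mathis bound, throughput ≤ (MSS / RTT) × (C / √p). The 1460-byte MSS, the 1 ms round-trip time, and C = 1 are illustrative assumptions on my part (the constant depends on the loss model and TCP behaviour), so treat the output as a ballpark, not a measurement.

```python
import math


def mathis_throughput_bps(mss_bytes, rtt_seconds, loss_rate, c=1.0):
    """Approximate upper bound on one TCP flow's throughput, in bits/second.

    loss_rate is a probability (0.000001 means 0.0001% packet loss).
    c is the Mathis constant; 1.0 is a common simplification (assumption).
    """
    if not 0 < loss_rate <= 1:
        raise ValueError("loss_rate must be in (0, 1]")
    if rtt_seconds <= 0:
        raise ValueError("rtt_seconds must be positive")
    return (mss_bytes * 8 / rtt_seconds) * (c / math.sqrt(loss_rate))


if __name__ == "__main__":
    # Assumed values for illustration: 1460-byte MSS, 1 ms round trip.
    for loss_percent in (0.00001, 0.0001, 0.001, 0.01):
        bound = mathis_throughput_bps(1460, 0.001, loss_percent / 100)
        print(f"{loss_percent:.5f}% loss -> about {bound / 1e9:.2f} Gbps per flow")
```

With these assumed values, the bound at 0.0001% loss sits only a little above 10 Gbps, and every tenfold increase in loss cuts it by roughly a factor of three. Longer round-trip times make it worse.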

Are your Switches Cattle or Pets?

So now that we’ve done a bit of math and metaphors, we get to the real question of the day: Are your switches Cattle? Or are they Pets? I would argue that if you’re measuring your AUL in less than 2,000 servers, then your switches are probably Pets. You can’t afford to lose even one without bad things happening to your network and, more importantly, to the critical business applications that are being accessed by those pesky users. Did I mention they are the only reason the network exists?

Now this doesn’t mean that you can’t afford to lose a device. It’s going to happen. Plan for it. Have spares, support contracts, whatever. But my point is that you probably won’t be able to go with a disposable infrastructure model like the one suggested by many of the engineers I’ve talked to in recent months about why they want white boxes in their environments.

Wrap up

So are white boxes a bad thing if I don’t have a ton of servers and a well-architected distributed application? Not at all! There are other reasons why white box could be a great choice for comparatively smaller environments. If you’ve got the right human resource pool internally with the right skill set, there are some REALLY interesting things that you can do with a white box switch running an OS like Cumulus Linux. For some ideas, check out this Software Gone Wild podcast with Ivan Pepelnjak and Matthew Stone.

But in general, if your metric for Acceptable Unit of Loss is not measured in Data Centres, Rows, Pods, or Entire Racks, you’re probably just not big enough.

Agree? Disagree? Hate the hand drawn diagram? All comments welcome below.

If we don’t have credible data on the relative failure rates of cattle and pets, what difference does the color of the box make?

As a company founded with bare-metal networking in mind, we agree that the AUL (sometimes called blast radius) of the network is extremely important. With that said, it continues to amaze us how networking professionals aren’t able to leverage the extensive deployment and monitoring tools that have been built for the ‘cattle’.

You rightly highlight that you don’t want to lose either of the two networking systems in your diagram. I’m not sure why you’ve equated bare-metal networking with increased blast radius, but we’ll leave that for another discussion. What I do want to offer up is… if one of the switches in your networks were to experience an issue, detecting and repairing is fundamental… what better tool chain to leverage for operations than one founded around bringing order to the chaotic application/server environment that you describe.

Growing up, I knew people whose most cherished pets were cows.

Hey JR!

Thanks so much for the comments. I don’t think we should be amazed that network professionals don’t embrace the tools built for “cattle”. Most network professionals disdain network management tools in general, so it’s not something that keeps me up at night. What I’m really excited to see is how the CI/CD tool chains are being driven down into the network ops space, which is forcing network professionals to re-evaluate their accepted “truths”.

My point in this post was that many enterprise focused people are looking at whitebox in general as a way to cut cost and trying to rationalize their decision making process with supporting “facts” that just don’t hold water.

I think that Cumulus, and others, have a great opportunity to really shake up the entrenched network market with a new approach. One of the major issues I still see with using Linux as a network OS (and this extends to using the tool chains that were designed to bring order from the server chaos) is that these tools and OSes were not purpose-built for networking. Although the entire industry has been wanting to break the silos down, the silos did exist for a reason, and I think we need to recognize that difference if we are to have a chance of making this new reality stick. I think that the server/app focused tool chains need to become network aware and understand the operational characteristics of things like routing protocols, BFD, MPLS, etc. before they become truly useful in the enterprise space.

Let’s not even get into the question of how a server OS running on a network switch and operating like a firewall gets treated in a PCI compliance audit. 🙂

Definitely interesting times and I’m sure this will get worked out in time.

I’m just hoping that people start to move to solutions like Cumulus for the right reasons. The worst thing for everyone is a faulty rationalization that results in a misaligned solution being implemented.

We all know that at the end of the day the customer will say “XYZ product sucked” and not “I did something dumb”. 🙂

@netmanchris

+1 on people picking technology solutions for the right reason (and with the right expectations)!

cheers,

JR

Pretty! This was an extremely wonderful article. Many thanks for supplying this info.